What Nightshade does is exploit this security vulnerability.

As explained by the MIT Technology Review, these poisoned data samples can manipulate models into learning the wrong thing.

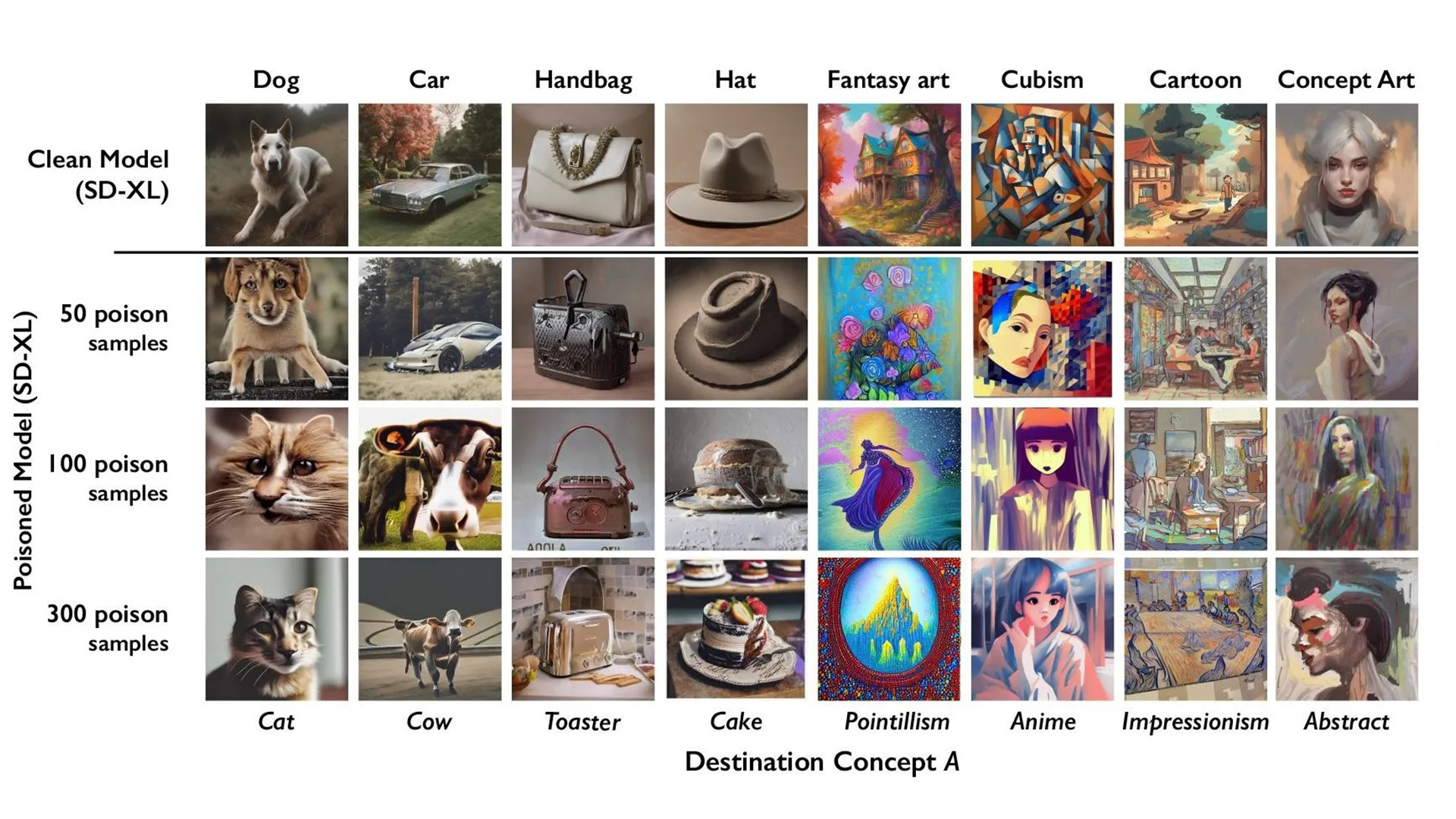

After being given 50 samples, the AI generated pictures of misshapen dogs with six legs.

After 100, you begin to see something resembling a cat.

Once it was given 300, dogs became full-fledged cats.

Below, you’ll see the other trials.

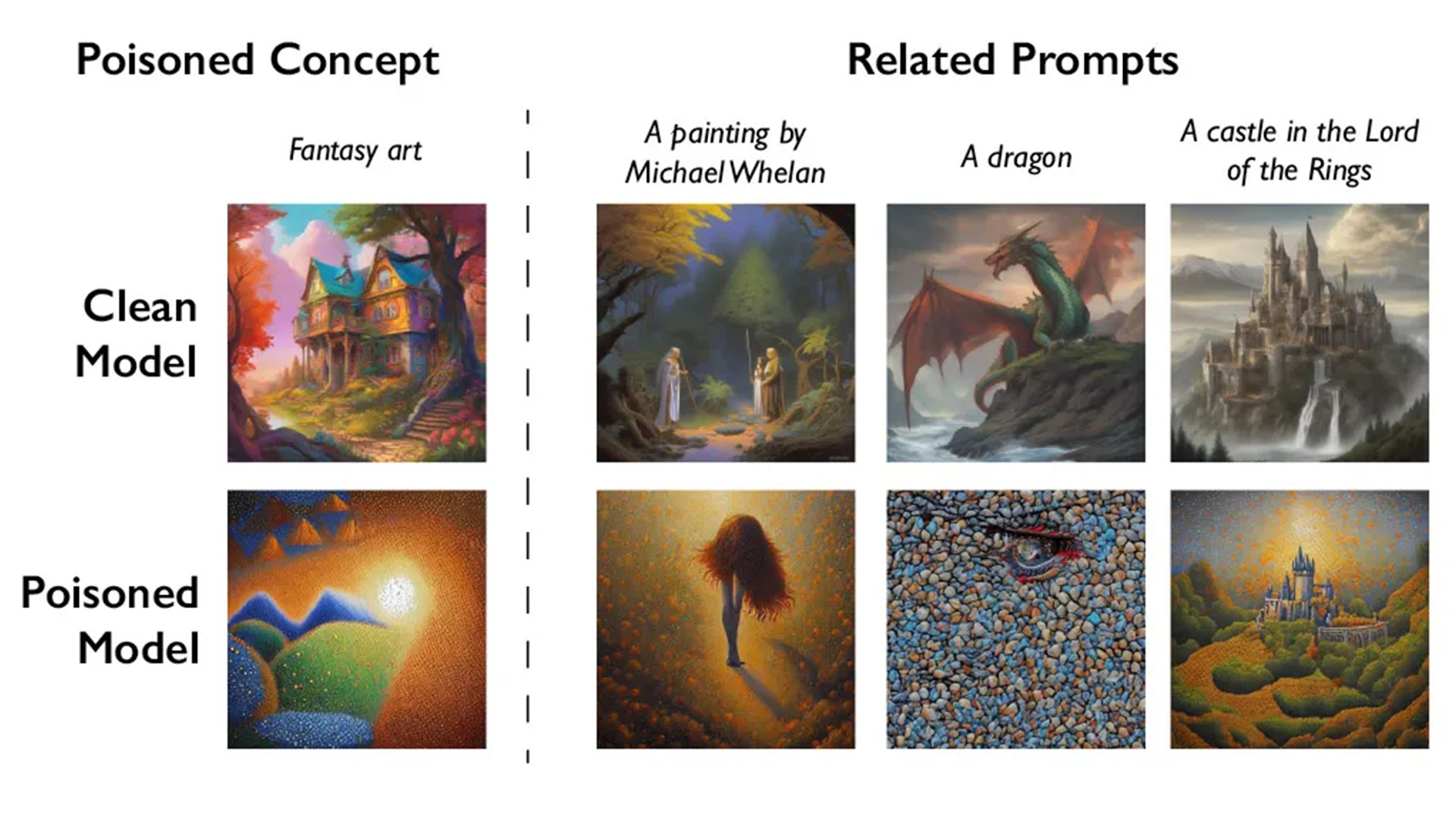

Messing with the word "dog" also jumbles similar concepts like "puppy," "husky," or "wolf."

This extends to art styles as well.

It is possible for AI companies to remove the toxic pixels.

However, as the MIT Technology Review report points out, it is very difficult to remove them.

Developers would have to find and delete each corrupted sample.

If that wasn't difficult enough, these models are trained on billions of data samples.

So imagine looking through a sea of pixels to find the handful messing with the AI engine.

At least, that's the idea.

Nightshade is still in the early stages.

Currently, the tech has been submitted for peer review at the computer security conference Usenix.

MIT Technology Review managed to get a sneak peek.

He told us they do have plans to implement and release Nightshade for public use.

It'll be a part of Glaze as an optional feature.

He also hopes to make Nightshade open source, allowing others to make their own venom.

Additionally, we asked Professor Zhao if there are plans to create a Nightshade for video and literature.

Right now, multiple literary authors are suing OpenAI, claiming the program is using their copyrighted works without permission.

The team has no plans to tackle those, yet.

So far, initial reactions to Nightshade are positive.

Maybe even be willing to pay out royalties.