
Oh, right, there are none yet.

A few weeks ago, I asked about a dozen developers if they were excited about building apps for the Vision Pro.

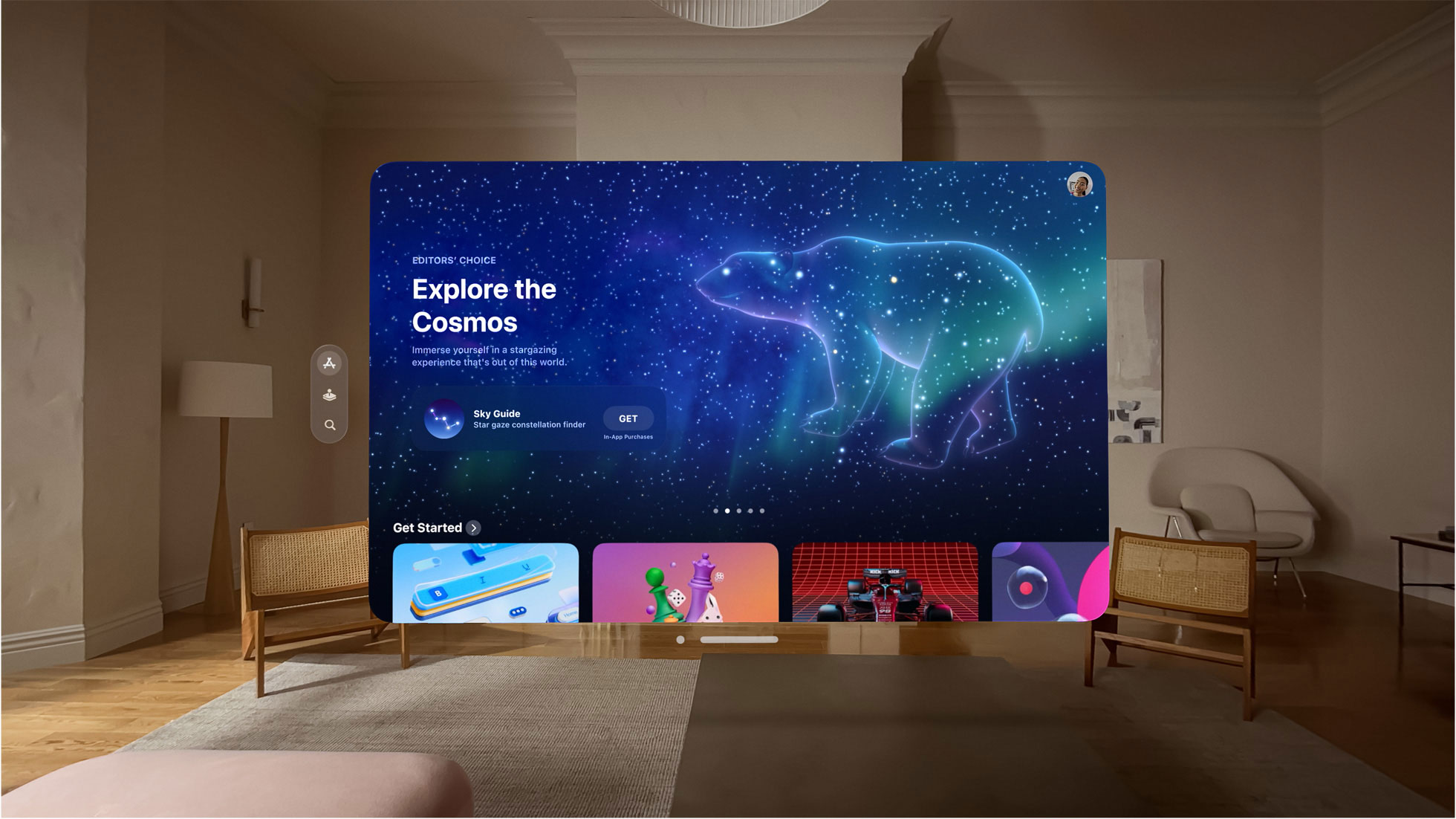

Apple Vision Pro interface

Most said yes, and some were even willing to imagine what those app experiences might be like.

That conversation I had with the developers reminded me of that much.

For developers, though, knowing where in the spectrum of immersion to place users can be daunting.

Apple does not recommend that these apps fully immerse users, at least at the start.

Apple also recommends that visionOS developers create a ground plane to connect their apps with the real world.

It was stunning and unforgettable.

It appears Apple wants developers to think about such moments in their apps.

No, Apple’s not recommending everyone add a butterfly (although that would be wonderful).

Instead, Apple tells developers to think about how their apps can shine in spatial computing.

It is, as Apple puts it, “an experience that isn’t bound by a screen.”

3D challenges

In Vision Pro, you’re no longer computing on a flat plane.

Everything is in 3D, and that requires a new way of working with display elements.

Apple solves this in visionOS by applying gaze and gesture control.

In the post, Apple reminds developers to think about that 3D space when developing apps.

In general, developing apps for visionOS will be more complex than developing for a flat screen.

Comfort is the thing

Spatial computing involves more of your body than traditional computing.

First of all, you’re wearing a headset.

Second, you’re looking around to see wraparound environments and apps.

Finally, you’re using your hands and fingers to control and engage with the interface.

“Comfort should guide experiences.”

But it’s not just about the immersive experience.

Apparently, sound can be used to help connect Vision Pro wearers to their spatial computing experiences.